Good morning!

- Tim Cook, every WWDC

It's time for more justification of a big tech purchase!

For the past few years, our schedule on the day of Apple's WWDC keynote has been roughly the same: in the early morning, strap on the Valve Index and join friends in the Closing Ceremonies of that year's Furality, then attempt to catch the WWDC keynote live unless something comes up.

In 2023, we watched WWDC live in VRChat, remarking on just how bad the Vision Pro's personas looked at that time compared to the fursonas we'd been petting in VR just hours earlier.

In 2024, it fell during our scheduled time to watch cartoons and exercise with friends, and that took priority over having hot takes.

In 2025, the something that came up was Furality delaying the close of the entire con by a day due to an 8+ hour upstream provider outage, which had us trying to get some sleep during the day so we could stay up until 5am the following morning.

Even though we use the Vision Pro for most regular VRChat sessions via ALVR, there have been a couple of dealbreakers that stop us from using it for big events like Furality. But the Index is also getting... a bit long in the tooth. It's bulky. It hurts our face if we use it too long. The screen doesn't feel so crisp, and ours has had a persistent dead pixel since the day we got it (used). And while modding it to add things like eyetracking is possible, it seemed like the sort of thing we'd probably fuck up.

When the Bigscreen Beyond 2e was announced in March, we decided to take a wait-and-see approach: to see whether Apple would fix the dealbreakers in their WWDC keynote, or whether Valve would suddenly decide to announce the Deckard.

TL;DR summary of the following: it's a partial success at least.

visionOS 26

visionOS 26 follows Apple's renumbering scheme common to all OSes this year. Since we're very rarely in the "potentially useful OS reviews" camp (there are a lot of people with old iPhones/iPads/Macs to stick the betas on to, but not that many Vision Pros), it's probably worth going over what we've been using so far.

Widgets

Widgets are... well, they're widgets. They automatically stick to walls, they persist when the headset is rebooted. They're slick in their implementation, and can help with some ambient elements that would maybe be otherwise missed.

Also maybe we shouldn't have recorded this while on the exercise bike.

In beta 1, it refused to display our calendar, but that got fixed in beta 2.

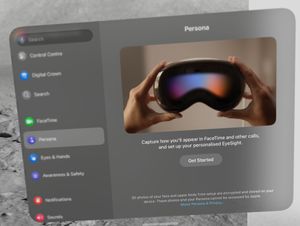

Spatial Personas

The improved Spatial Personas looked great in Apple's slick demo video. People, including Adam Savage, seem enamoured with them.

But in the 10 months of owning a Vision Pro, we have never set a Persona up.

Part of that is simply a lack of anyone to call to be impressed with how a Spatial FaceTime call could look.

But also, we are a collection of gender dysphoric creatures piloting around a meat suit. There are many things about our physical body that we have accepted - our broad shoulders, as an example, which might make it difficult to find cute outfits, but mean we can put some extra strength into hugging [1].

But most of those things we haven't accepted are from the neck up.

Our voice is wrong, even if it is getting better.

Our face is Wrong.

Our ability to grow shitty facial hair is Wrong.

Our hair not growing the way it used to is Wrong.

Our lack of snout is Wrong.

Why would we want to digitally recreate the face we identify least with, to use that rather than an animoji, memoji, or ourselves?

Spatial Scenes

Spatial Scenes is Apple's branding for the upgraded version of Spatial Photos. These can be captured spatially, or significantly more likely, generated from regular 2D photos. While the previous version provided more of a tilt shift effect that was too subtle to really see outside of the Vision Pro... that's not true of the new version.

This is our old dog, Heidi (~2005-2019). This photo of her is from a 2008 family holiday, captured on a cheap digital camera of the era. No fancy modern phone tricks, no LIDAR.

And it almost feels like I could pet her.

The effect doesn't always work. Sometimes it breaks spectacularly and assumes a midground element is part of the far background, or horribly smears it backwards. Sometimes you can see the artefacts from it positioning something on the wrong layer.

But when it does work, it's very impressive.

Spatial Browsing

This amounts to a variant of Safari's reader mode, with text floating in front of a cloud that changes colour based on the last image scrolled past. If it can, it will also convert the image into a spatial scene.

We rarely remember reader mode exists in general on any device, being honest. The spatial scene conversion is neat to see for compatible photos.

In beta 1, the background cloud was way, way too aggressive about changing colour in some circumstances. In beta 2, the transitions at least have some time fading out, though going from dark to light or vice versa can still be odd.

You can now use your eyes alone to scroll the page (and other things too, like the menu in the settings app). This is kind of finicky, and I feel like in most circumstances, using a hand would still be preferable to gaze alone.

why go beyond

As we said, there's some dealbreakers we've found over the past 10 months when it comes to Furality and some other uses that cause us to go back to a SteamVR based headset.

dealbreaker 1 - you are the controller

We actually really like using handtracking for normal VRChat sessions. It has its problems, but having your hands there unobstructed... it's nice. We don't often get phantom sense within VR despite experiencing other phantom limbs sometimes, but the closest we come is when we're resting our hands on someone - there's a substantial difference between it just being our hands and being hyperaware of the piece of plastic that's restricting their movement - particularly with the grips we have on the Index Knuckles to make them better fit our wrists.

However, most of our regular sessions take place in relatively flat or easy to navigate worlds - handtracking isn't always great at moving through them, but it works. We tend to stay in approximately the same area for a while.

Furality makes wide open spaces with a lot of things to explore. The Dealer's Den has us changing avatars and subsequently menu-ing frequently, and there's only so much handtracking can do with this kind of situation.

Apple did kind of mitigate this by announcing support for the PSVR2 Sense controllers - support which was already added to the beta version of ALVR shortly after the keynote.

The problem is that Sony don't currently sell the controllers separately, and we sold our PS5 after Ratchet & Clank: Rift Apart's PC port was announced because that made it clear that anything first party we were interested in would eventually make it elsewhere.

There is a PC adapter for the PSVR2, a headset which on paper would do some of what we want, but...

dealbreaker 2 - open your eyes (and face)

The Apple Vision Pro has some of the best eye and face tracking in the business - which makes sense when Apple's ARKit is one of the big standards in setting up face tracking for avatars.

Unfortunately, only Apple is allowed to use the eye and face tracking on the Vision Pro. My hope that this would change at WWDC 2024 didn't pan out, and nor did it happen at WWDC 2025.

I can kind of understand it from a "let's not give Facebook access to gaze data" perspective - but at the same time, no matter how many consent dialogues it takes, we would actually like to give ALVR (and VRChat themselves, should a native port happen) that info.

The PSVR2 has the same problem; eye tracking works on the PS5, but it is not part of the PC drivers. Modding is underway, but as far as I can tell there is no public release of anything yet.

My suspicion is upcoming Android XR headsets won't have this limitation, and maybe that'll cause Apple to reconsider. For now, I feel like a good PC native solution with eyetracking is needed to convince developers (and/or Valve) to add native support for eyetracking, including foveated rendering.

The Bigscreen may only have eye tracking and not face tracking, but that's an easy fix now thanks to Project Babble.

dealbreaker 3 - fullbody

This one is realistically one I didn't expect Apple to do anything about, but it still presents a problem.

We end up in VR a lot at unsociable hours; the curse of being in the UK and most friends being in the US. That means generally wanting things to be as simple as possible.

These days, that often means just not using full body trackers, but for Furality, we need to be able to wiggle properly. And for that, there's still no simpler solution than SteamVR trackers - IMU based trackers need calibration before each session, a solution like the Kinect does not work well in this room, and a solution like adding an extra tracker to the headset to then run OVR Space Calibrator would cause other problems.

future improvements

So, the Beyond should fix most of our problems with the Index, and by being SteamVR based, it doesn't have the dealbreakers.

Beyond the likely necessity of a GPU upgrade, we'd like to replace the Index Knuckles at some point - with our Index kit being used, the analogue stick on the right one has been broken for a while anyway.

The key question is just... with what. 6 years on and it doesn't seem like there's a meaningfully better option, if what you want is precise finger tracking while maintaining buttons.

So... all this, and we're still back where we were in 2022.

Still waiting for Deckard to fix part of the industry.

Footnotes

[1] Descriptions of Undertale/Deltarune's Toriel as "being built like a fridge" have also helped a lot.